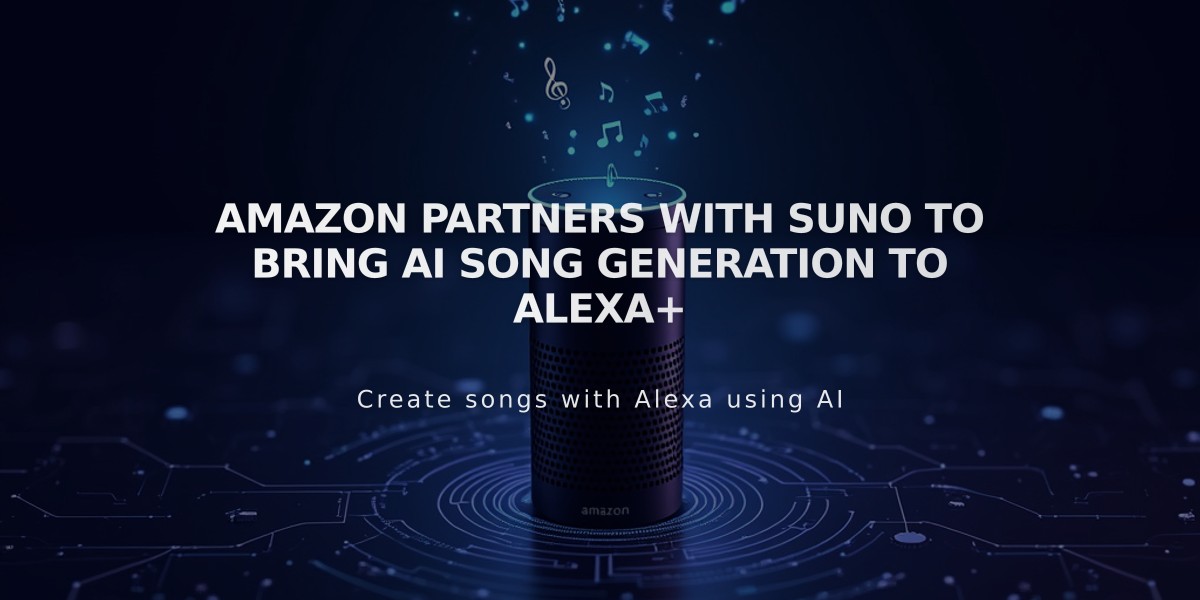

ByteDance Pulls Taylor Swift AI Demo After Eerily Real Performance on OmniHuman Platform

ByteDance, TikTok's parent company, has developed OmniHuman-1, an advanced AI video generation framework that can create eerily realistic videos from a single image, complete with synchronized audio and movement.

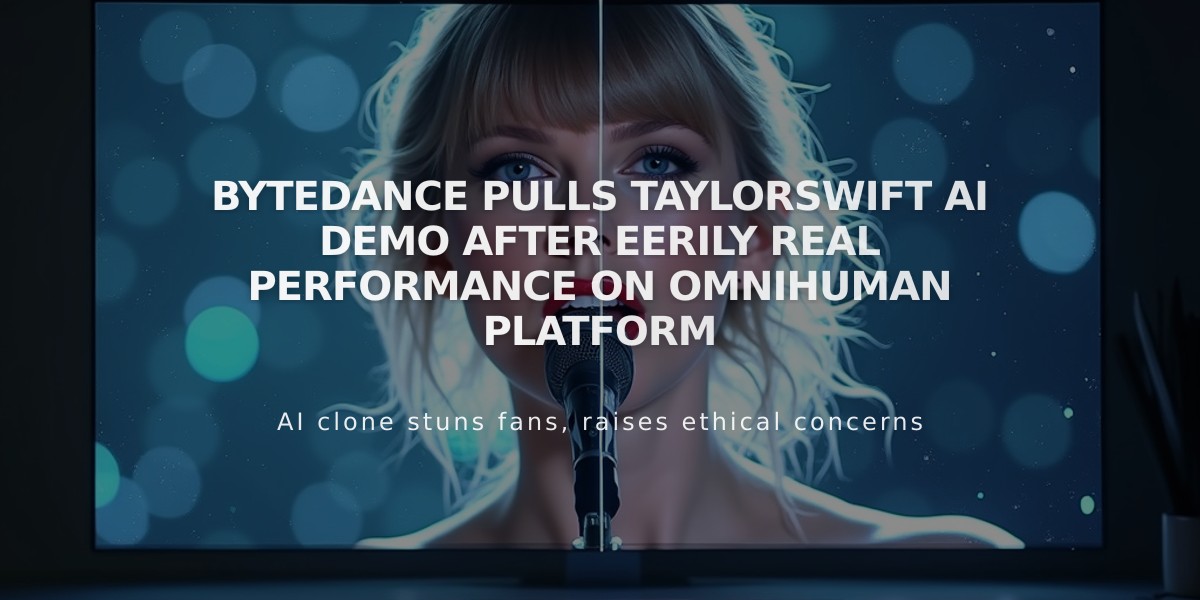

Taylor Swift AI deepfake screenshot

The technology can generate videos of people singing, dancing, and speaking, with particularly convincing results for musical performances. Among the demonstrated examples were AI-generated videos of Taylor Swift performing songs she never actually sang, as well as footage of a young Albert Einstein speaking.

Key features of OmniHuman-1 include:

- Multimodal video generation from single images

- Synchronized audio and lip movement

- Support for various music styles and body poses

- Ability to handle high-pitched songs

- Different motion styles for different music types

While the technology produces remarkably realistic results, some telltale signs of AI generation remain:

- Blurry, bokeh-style backgrounds

- Slightly jerky movements

- Minor lip-sync misalignments

ByteDance's advantage in developing this technology stems from its access to vast amounts of TikTok and Douyin user data, including millions of hours of people dancing, singing, and performing. This extensive dataset allows for more precise training of human movement patterns in AI-generated videos.

The rapid advancement of this technology raises concerns about increasingly convincing deepfake content, as the barrier to creating such videos keeps falling.
